- October 30, 2023

- By AXM

The Foundation Model Transparency Index: Assessing the Openness of Leading AI Models

Source: Introducing The Foundation Model Transparency Index

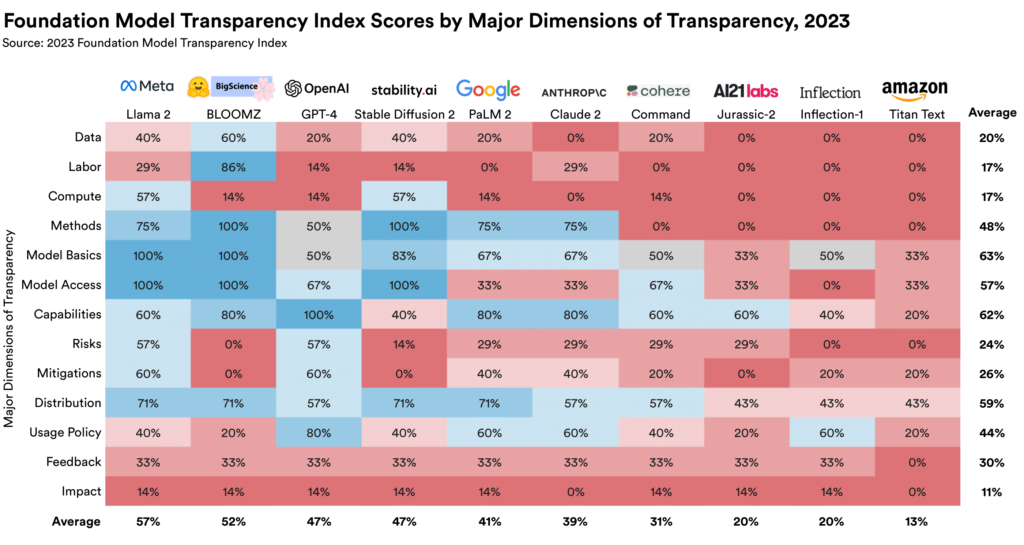

A new 100-point index reveals a startling lack of transparency among major foundation model developers like OpenAI, Meta, and Google. Created by researchers at Stanford, MIT, and Princeton, the Foundation Model Transparency Index (FMTI) evaluates companies on 100 indicators related to how they build, operate, and distribute their AI models. The inaugural 2023 FMTI finds the industry has a long way to go on transparency.

Models like GPT-3, GPT-3.5 and DALL-E 2 are used by millions but provide little visibility into their inner workings. This opacity mirrors issues with social media platforms and other digital technologies lacking transparency around key practices. The FMTI aims to quantify and track transparency issues in the foundation model field to enable better policymaking and industry norms.

The index covers three major domains: upstream practices such as the data and compute resources used, properties of the model itself, and downstream issues such as user policies and controls. Together, the domains are divided into 13 subcategories, each with multiple indicators covering topics like training data sources, energy use, capabilities and limitations, and opportunities for user feedback.

Scoring 10 major players against the 100 FMTI indicators reveals an industry falling far short on transparency. The top score was only 54/100, earned by Meta for its Llama 2 language model. The average score was a mere 37/100. However, 82 of the 100 indicators were satisfied by at least one company, which shows substantial room for improvement if developers adopt the best practices of their peers.
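Two numbers in these results are easy to conflate: a single developer's score, and the count of indicators that at least one developer satisfies. The sketch below shows the difference on toy data, assuming each indicator is simply marked as satisfied (1) or not (0). The developer names, indicator names and values are invented for illustration and are not FMTI data.

```python
# Toy data only: developers, indicators and 0/1 values are hypothetical.
scores = {
    "dev_a": {"data_sources": 1, "compute": 1, "wages": 0, "usage_policy": 1},
    "dev_b": {"data_sources": 0, "compute": 0, "wages": 1, "usage_policy": 1},
    "dev_c": {"data_sources": 0, "compute": 1, "wages": 0, "usage_policy": 0},
}


def developer_totals(scores):
    """Sum each developer's satisfied indicators (1 = satisfied, 0 = not)."""
    return {dev: sum(ind.values()) for dev, ind in scores.items()}


def satisfied_by_any(scores):
    """Count indicators that at least one developer satisfies."""
    indicators = {name for ind in scores.values() for name in ind}
    return sum(
        any(ind.get(name, 0) for ind in scores.values()) for name in indicators
    )


totals = developer_totals(scores)
top = max(totals.values())                 # best single developer
mean = sum(totals.values()) / len(totals)  # average across developers
union = satisfied_by_any(scores)           # indicators met by someone

print(f"top={top}, mean={mean:.1f}, satisfied_by_any={union}")
```

In this toy case the best developer satisfies 3 of 4 indicators, yet every indicator is satisfied by someone. The same pattern explains how a top score of 54 can sit alongside a much larger count of indicators that are met somewhere in the industry.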

Open-source models like Llama and Hugging Face’s BLOOM actually scored higher than proprietary alternatives like Google’s PaLM and OpenAI’s GPT-4. They more transparently disclosed details about training data, compute resources and other upstream practices. Closed models offered little visibility into these inner workings.

The Biden administration has already elicited voluntary transparency commitments (I wrote about these too in a separate article) from major tech firms. The FMTI provides a benchmark to evaluate progress on these pledges. It also informs major AI governance initiatives like the EU’s upcoming AI Act. The FMTI researchers are engaging directly with companies and governments to advocate for transparency norms and legislation.

Labour Fairness

A fair AI system requires fair treatment of the human labour involved throughout its development. Key aspects of labour fairness include disclosing where human labour is used across the data pipeline, the organizations employing these data “labourers,” and their geographic distribution. Wage transparency is also critical – are data annotators and content moderators paid a living wage? Are the instructions given to AI workers and protections like mental health support disclosed? Overall, human labour is essential for building AI systems, from data collection and annotation to red team testing. But this reliance on ghost work to power AI is frequently opaque. Shedding light on human labour practices through transparency indicators can enable accountability and show whether producers are responsibly managing this invisible workforce.

Labour fairness spans additional dimensions, like whether employees or contractors can collaborate and grow skills in their roles. But foundational transparency around the use of human labour, employment relationships, locations, wages, instructions, and protections is needed first. The use of contract content moderators has often been shrouded in secrecy, while several third-party CRM giants have pulled out of the market altogether due to labour actions in several new jurisdictions. Disclosure on these basic fronts, even if imperfect, can catalyze scrutiny, audits, and pressure for improved labour practices. Transparency indicators bring these issues into the spotlight.

Mitigating ‘systemic’ risks and intentional spread of harm

Responsibly deploying an AI system requires rigorously evaluating and transparently disclosing its potential risks. Key risks include unintentional harms like biases, toxicity, and fairness issues as well as intentional misuses like fraud, disinformation campaigns, and cyberattacks. A responsible AI developer should clearly describe these risks and provide illustrative examples to demonstrate them. Quantifying risks through technical evaluations before release and externally reproducing these evaluations are critical for accountability. Both first-party and independent third-party auditing of risks should occur.

- There is a strong assumption in many current AI policy proposals that audits will be conducted internally by the companies developing the AI systems. Proposals tend to focus on specifying audit procedures and requirements, rather than enabling meaningful external oversight.

- Internal audits come with inherent limitations and conflicts of interest. When audits are controlled and paid for by the auditee, their quality and their value for accountability can be compromised.

- External oversight by third parties such as academics, journalists and regulators has proven immensely valuable in uncovering issues with AI systems so far. However, there is less policy focus on facilitating such external auditing.

- Overall, the current landscape over-indexes on internal assessments, while meaningful accountability requires a robust external auditing ecosystem. Lessons from non-AI domains show the importance of enabling independent third-party audits, not just internal checks.

The EU has developed a robust, consultative set of regulations for independent audits under the Digital Services Act (DSA) requirements for VLOPs and VLOSEs (Very Large Online Platforms and Very Large Online Search Engines). The DSA requires annual independent audits of these platforms and search engines to assess compliance. A Delegated Act establishes rules to ensure rigorous, comparable audits through requirements on auditor independence, access to platform data and systems, audit methodologies and reporting.

AXM

I am a Systems Engineer turned community organizer. I offer 5 years of strategic leadership and teamwork. My 8+ years in internet service ventures and project management include chairing global conferences.